-

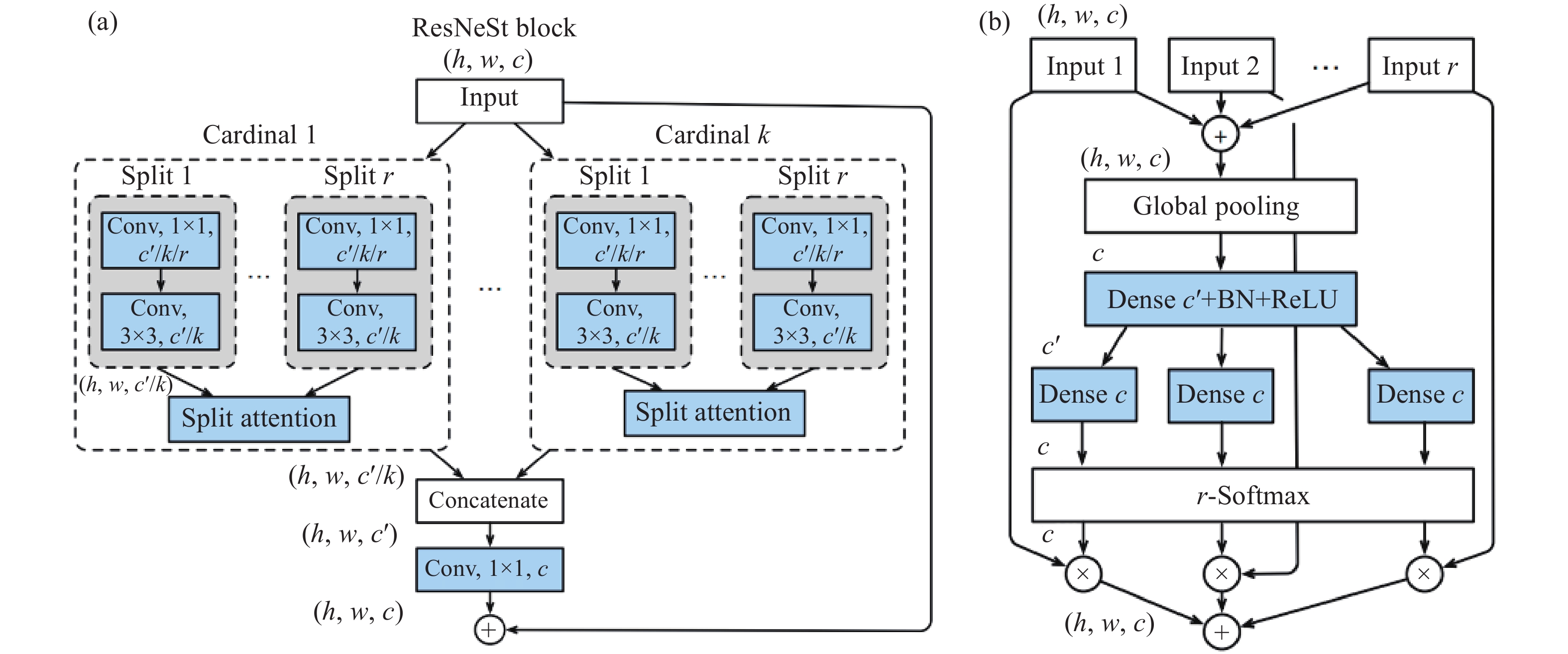

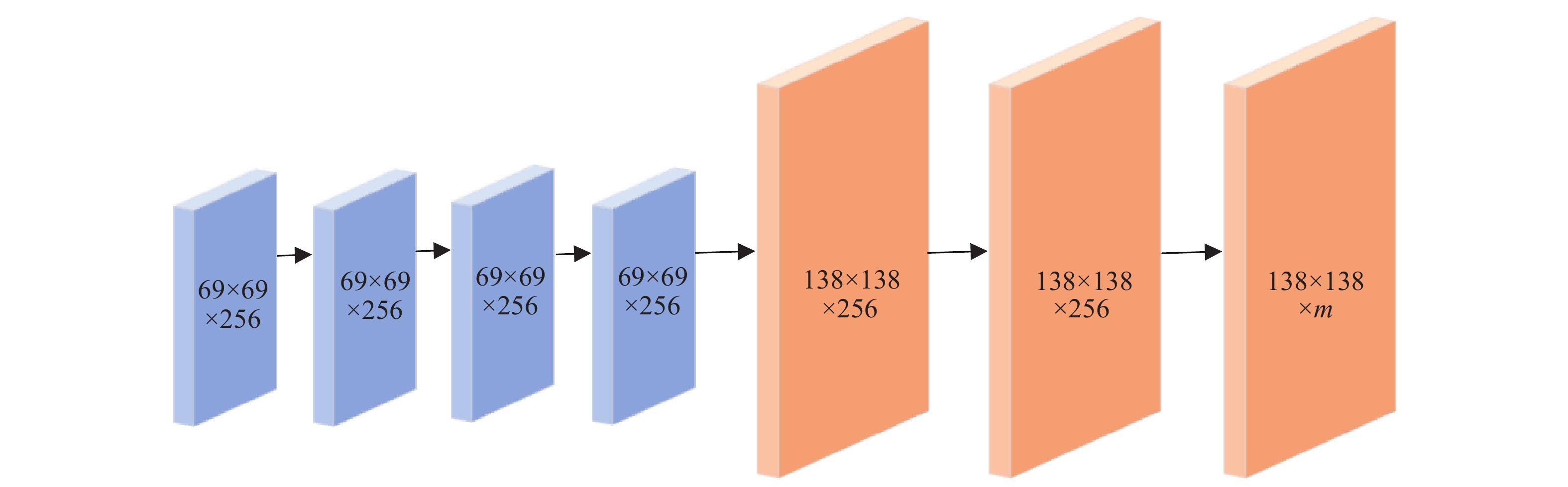

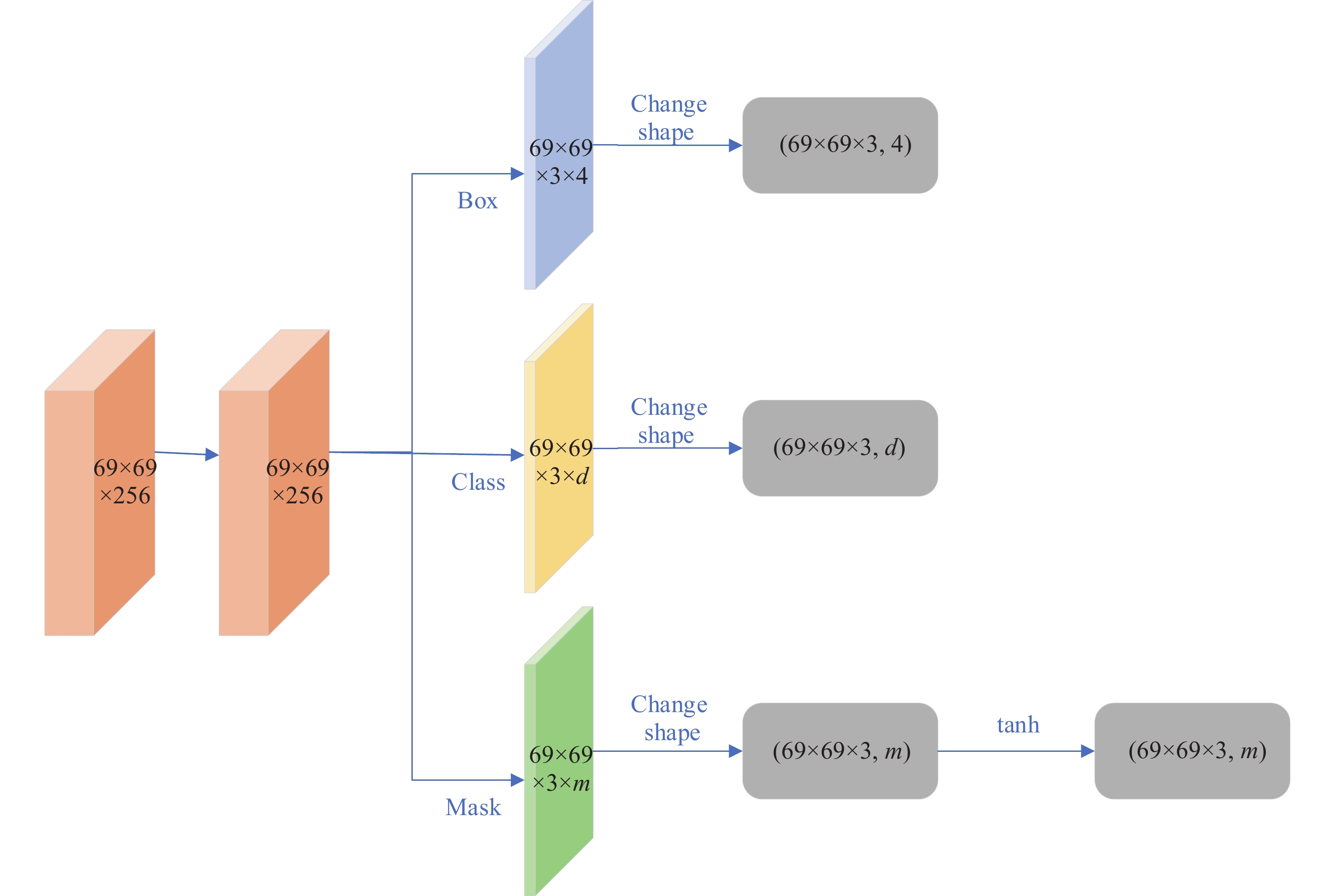

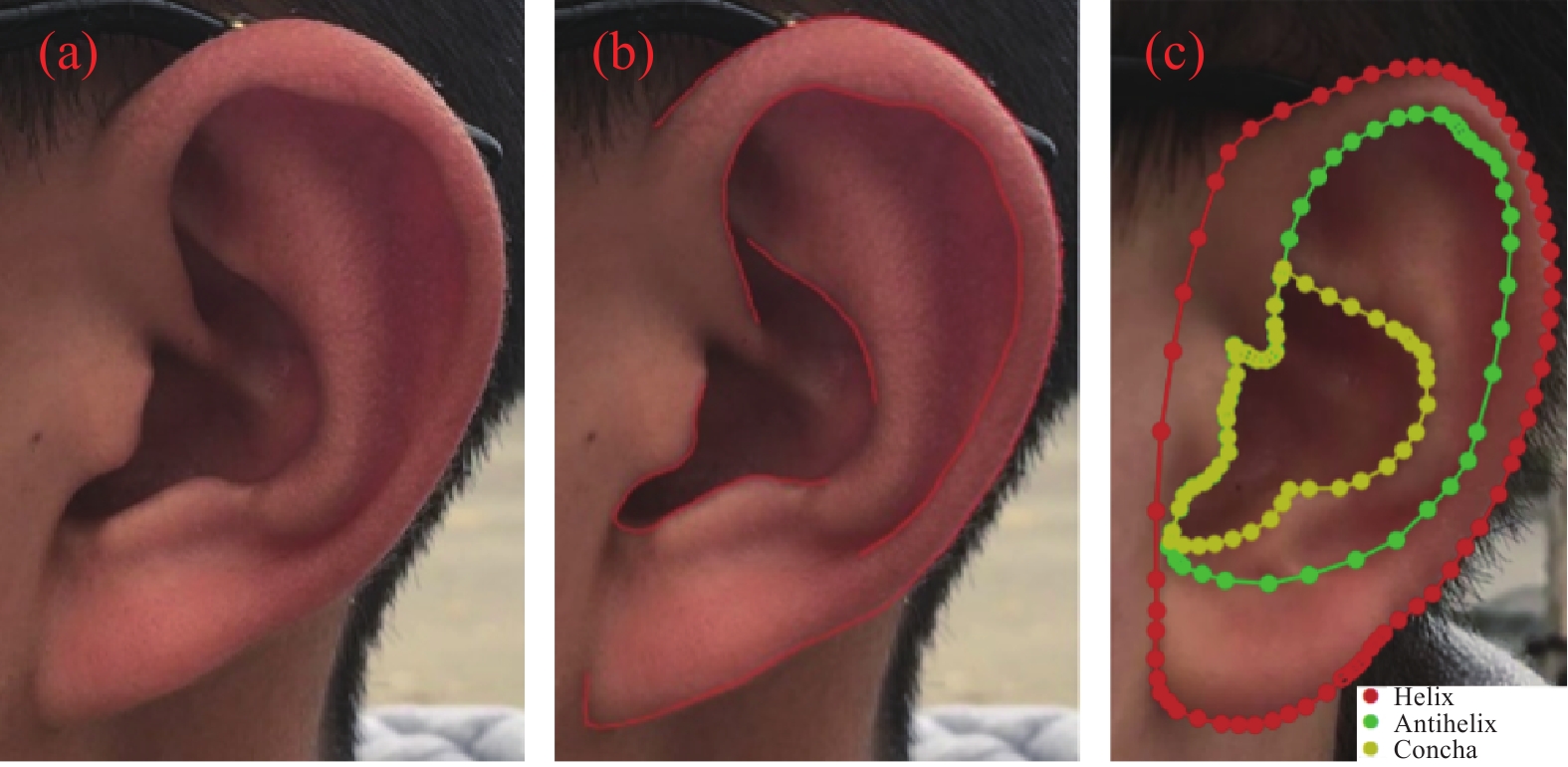

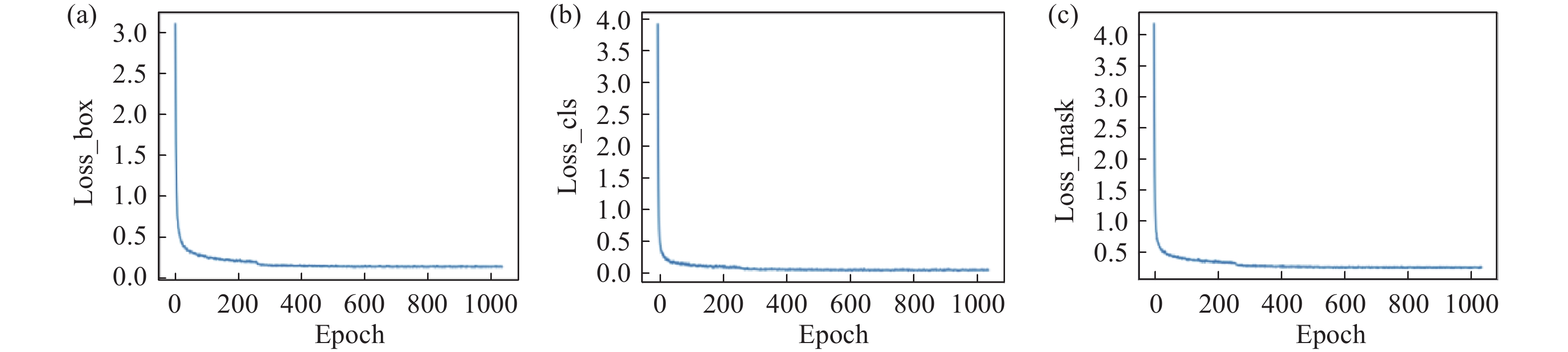

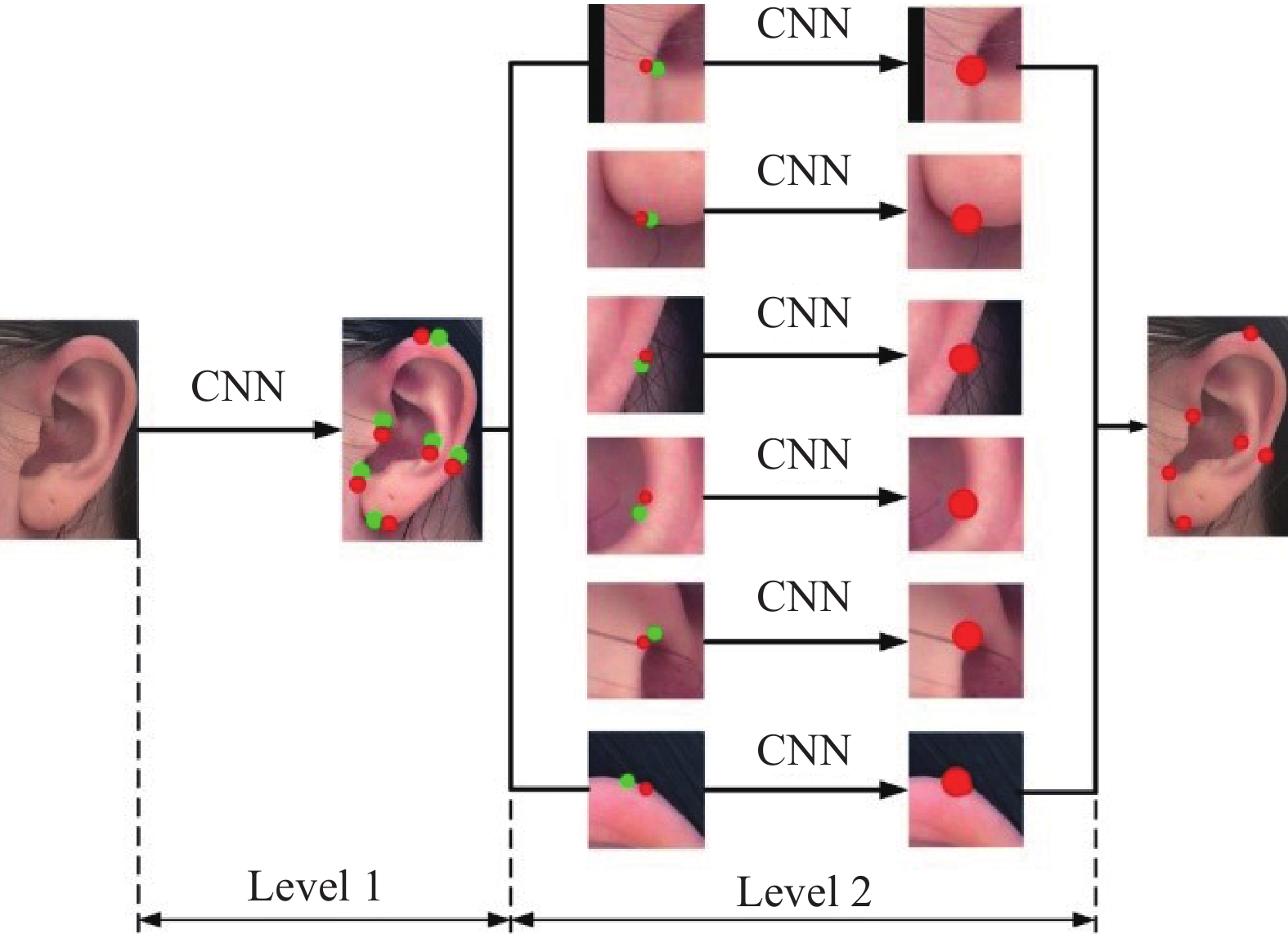

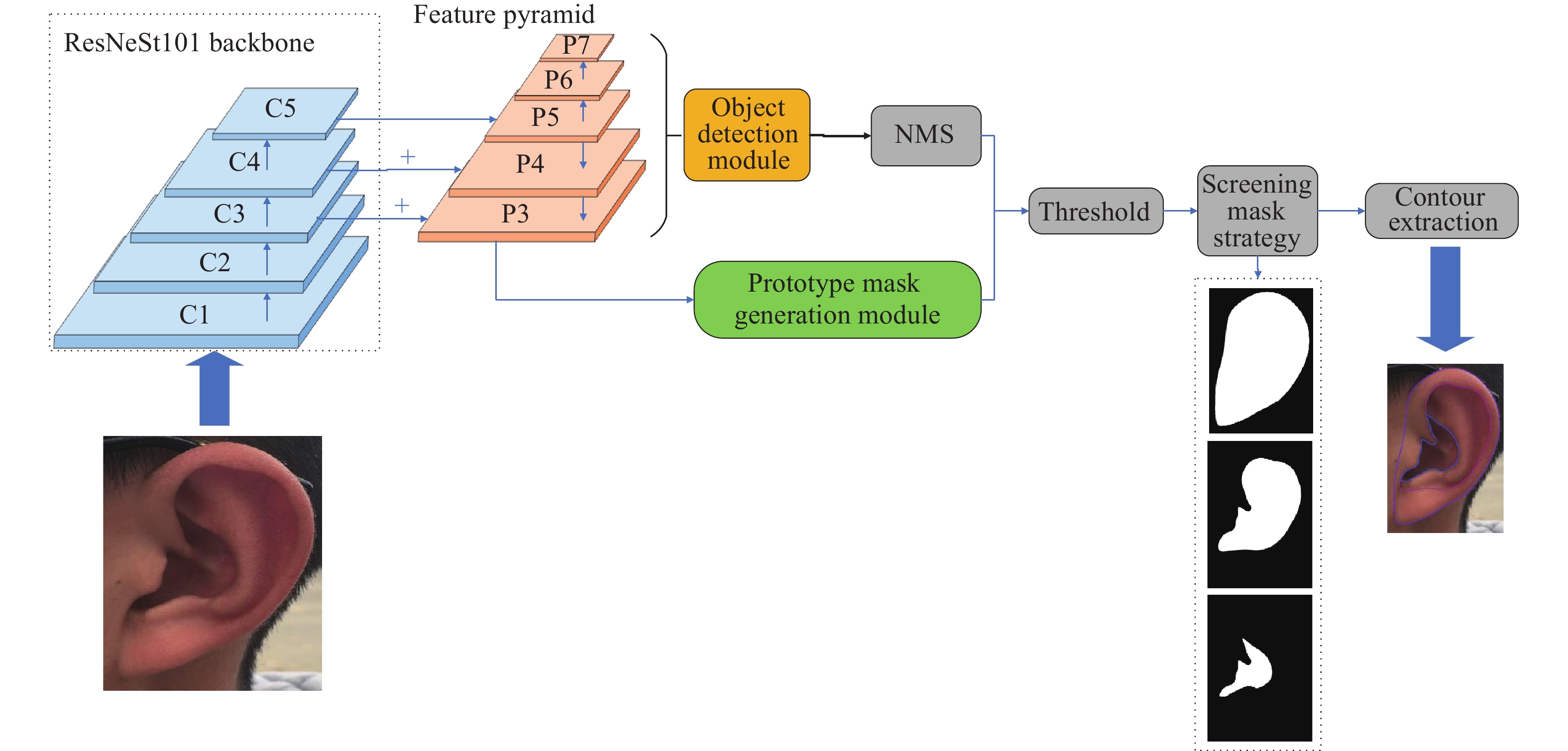

摘要: 在人耳形狀聚類、3D人耳建模、個人定制耳機等相關工作中,獲取人耳的一些關鍵生理曲線和關鍵點的準確位置非常重要。傳統的邊緣提取方法對光照和姿勢變化非常敏感。本文提出了一種基于ResNeSt和篩選模板策略的改進YOLACT實例分割網絡,分別從定位和分割兩方面對原始YOLACT算法進行改進,通過標注人耳數據集,訓練改進的YOLACT模型,并在預測階段使用改進的篩選模板策略,可以準確地分割人耳的不同區域并提取關鍵的生理曲線。相較于其他方法,本文方法在測試圖像集上顯示出更好的分割精度,且對人耳姿態變化時具有一定的魯棒性。Abstract: In related work, such as human ear shape clustering, three-dimensional human ear modeling, and personal customized headphones, the key physiological curves of the human ear and the accurate positions of key points need to be determined. Moreover, as an important biological feature, the morphological analysis and classification of the human ear are of considerable value for medical work related to the human ear. However, because of the complex morphological structure of the human ear, the generation of a general standard for the morphological structure of the human ear is difficult. This study divided the morphological structure of the human ear into three regions, namely, helix, antihelix, and concha, for instance segmentation and key physiological curve extraction. Traditional edge extraction methods are sensitive to illumination and posture variations. Moreover, the color distribution of one human ear image is relatively consistent. Thus, the transition among the three regions may not be obvious, which will cause poor adaptability for traditional edge extraction methods when extracting the key physiological curves of the human ear. To address this problem, this study proposed an improved YOLACT(You Only Look At CoefficienTs) instance segmentation model based on the ResNeSt backbone and the “screening mask” strategy, which improves the original YOLACT model from two aspects, namely, localization and segmentation. Our ResNeSt-based YOLACT model was trained with labeled ear images from the USTB-Helloear image set. In the prediction stage, the original cropping mask strategy was discarded and replaced with our proposed screening mask strategy to ensure the integrity of the edges of the segmentation area. These improvements enhance the accuracy of curve detection and extraction and can accurately segment different regions of the human ear and extract key physiological curves. Compared with other methods, our proposed method shows better segmentation accuracy on the test image set and is more robust to posture variations of the human ear.

-

Key words:

- human ear /

- physiological curve extraction /

- instance segmentation /

- improved YOLACT /

- ResNeSt

-

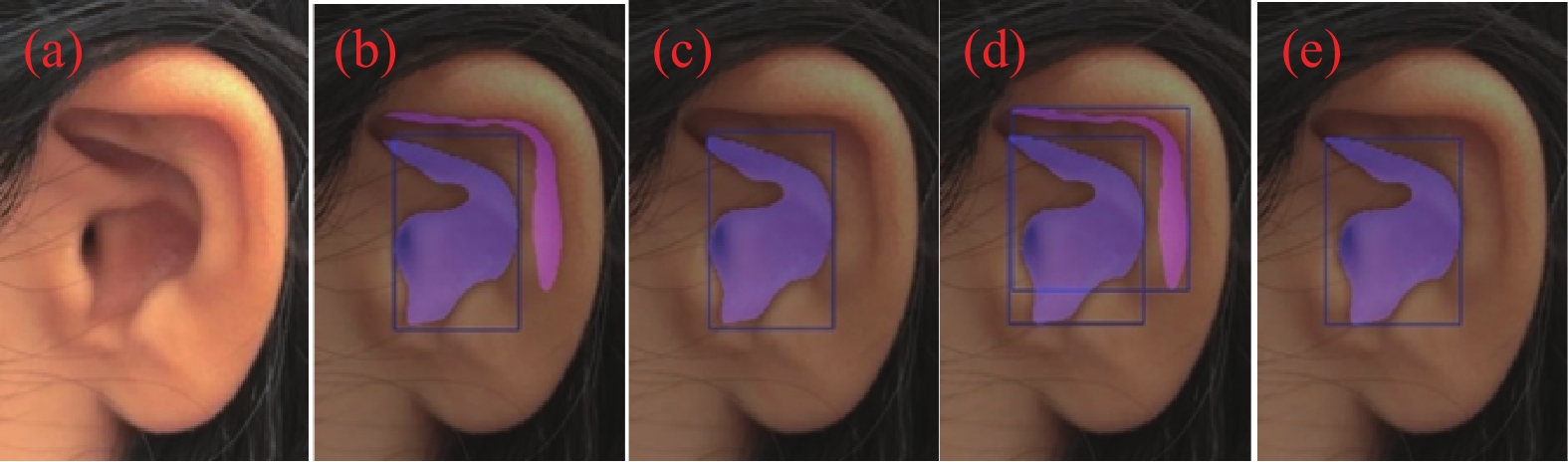

圖 5 模板處理. (a) 原圖; (b) 邊框和模板預測結果; (c) 裁剪模板結果; (d) 各區域外接矩形; (e) 篩選模板結果

Figure 5. Mask processing: (a) original image; (b) prediction of boxes and masks; (c) segmentation result with the cropping mask strategy; (d) bounding boxes of different regions; (e) segmentation result with the screening mask strategy

表 1 訓練超參數

Table 1. Training hyperparameters

max_size lr_steps max_iter batch_size 550 (30000, 60000, 90000) 120000 8 表 2 不同YOLACT模型的分割精度

Table 2. Segmentation accuracy of different YOLACT models

Model mIOU Dice coefficient YOLACT?ResNet101-crop 0.9514 0.9943 YOLACT?ResNet101-select 0.9518 0.9943 YOLACT?ResNet101-crop 0.9539 0.9950 YOLACT?ResNet101-select 0.9544 0.9950 表 3 YOLACT?ResNeSt101模型精度

Table 3. Accuracy of the YOLACT?ResNeSt101 model

% YOLACT?ResNeSt101 mAP_all mAP50 mAP70 mAP90 Box 95.14 100 100 96.63 Mask 98.13 100 100 97.98 表 4 模型改進前后提取關鍵曲線的準確率對比

Table 4. Comparison of curve extraction accuracy before and after model improvement

Model Accuracy YOLACT?ResNet101-crop 308/410 YOLACT?ResNet101-select 381/410 YOLACT?ResNeSt101-crop 344/410 YOLACT?ResNeSt101-select 395/410 表 5 模型改進前后實時性對比

Table 5. Real-time performance before and after model improvement

Model FPS Time/s YOLACT?ResNet101-crop 24.6 16.6 YOLACT?ResNet101-select 24.8 16.5 YOLACT?ResNeSt101-crop 16.6 24.6 YOLACT?ResNeSt101-select 16.8 24.4 中文字幕在线观看表 6 不同網絡模型分割精度比較

Table 6. Accuracy comparison of different segmentation models

Model mIOU DeepLabV3+ 0.8853 Improved YOLACT 0.9544 -

參考文獻

[1] Yang Y R, Wu H B. Anatomical study of auricle. Chin J Anat, 1988, 11(1): 56楊月如, 吳紅斌. 耳廓的解剖學研究. 解剖學雜志, 1988, 11(1):56 [2] Qi N, Li L, Zhao W. Morphometry and classification of Chinese adult's auricles. Tech Acoust, 2010, 29(5): 518齊娜, 李莉, 趙偉. 中國成年人耳廓形態測量及分類. 聲學技術, 2010, 29(5):518 [3] Azaria R, Adler N, Silfen R, et al. Morphometry of the adult human earlobe: A study of 547 subjects and clinical application. Plast Reconstr Surg, 2003, 111(7): 2398 doi: 10.1097/01.PRS.0000060995.99380.DE [4] Ronneberger O, Fischer P, Brox T. U-net: Convolutional networks for biomedical image segmentation // Lecture Notes in Computer Science. Munich, 2015: 234 [5] Milletari F, Navab N, Ahmadi S A. V-net: Fully convolutional neural networks for volumetric medical image segmentation // 2016 Fourth International Conference on 3D Vision (3DV). Stanford, 2016: 565 [6] Noh H, Hong S, Han B. Learning deconvolution network for semantic segmentation // 2015 IEEE International Conference on Computer Vision (ICCV). Santiago, 2015: 1520 [7] Zhao H S, Shi J P, Qi X J, et al. Pyramid scene parsing network // 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, 2017: 6230 [8] Chen L C, Papandreou G, Schroff F, et al. Rethinking atrous convolution for semantic image segmentation // Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Long Beach, 2017: 2536 [9] Wang Z M, Liu Z H, Huang Y K, et al. Efficient wagon number recognition based on deep learning. Chin J Eng, 2020, 42(11): 1525王志明, 劉志輝, 黃洋科, 等. 基于深度學習的高效火車號識別. 工程科學學報, 2020, 42(11):1525 [10] Chen X L, Girshick R, He K M, et al. TensorMask: A foundation for dense object segmentation // 2019 IEEE/CVF International Conference on Computer Vision (ICCV). Seoul, 2019: 2061 [11] Dai J F, He K M, Sun J. Instance-aware semantic segmentation via multi-task network cascades // 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, 2016: 3150 [12] Li Y, Qi H Z, Dai J F, et al. Fully convolutional instance-aware semantic segmentation // 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, 2017: 4438 [13] Bolya D, Zhou C, Xiao F Y, et al. YOLACT: real-time instance segmentation // 2019 IEEE/CVF International Conference on Computer Vision (ICCV). Seoul, 2019: 9156 [14] He K M, Gkioxari G, Dollár P, et al. Mask R-CNN // 2017 IEEE International Conference on Computer Vision (ICCV). Venice, 2017: 2980 [15] Ren S Q, He K M, Girshick R, et al. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans Pattern Anal Mach Intell, 2017, 39(6): 1137 doi: 10.1109/TPAMI.2016.2577031 [16] Cai Z W, Vasconcelos N. Cascade R-CNN: Delving into high quality object detection // 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, 2018: 6154 [17] Qin Z, Li Z M, Zhang Z N, et al. ThunderNet: towards real-time generic object detection on mobile devices // 2019 IEEE/CVF International Conference on Computer Vision (ICCV). Seoul, 2019: 6717 [18] He K M, Zhang X Y, Ren S Q, et al. Deep residual learning for image recognition // 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Las Vegas, 2016: 770 [19] Zhang H, Wu C R, Zhang Z Y, et al. ResNeSt: Split-attention networks [J/OL]. ArXiv Preprint (2020-04-19) [2020-12-31].https://arxiv.org/abs/2004.08955 [20] Lin T Y, Dollár P, Girshick R, et al. Feature pyramid networks for object detection // 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, 2017: 936 [21] Xie S N, Girshick R, Dollár P, et al. Aggregated residual transformations for deep neural networks // 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Honolulu, 2017: 5987 [22] Hu J, Shen L, Sun G. Squeeze-and-excitation networks // 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Salt Lake City, 2018: 7132 [23] Li X, Wang W H, Hu X L, et al. Selective kernel networks // 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, 2019: 510 [24] He T, Zhang Z, Zhang H, et al. Bag of tricks for image classification with convolutional neural networks // 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). Long Beach, 2019: 558 [25] Shelhamer E, Long J, Darrell T. Fully convolutional networks for semantic segmentation. IEEE Trans Pattern Anal Mach Intell, 2017, 39(4): 640 doi: 10.1109/TPAMI.2016.2572683 [26] Liu W, Anguelov D, Erhan D, et al. SSD: single shot MultiBox detector // Computer Vision – ECCV 2016. Amsterdam, 2016: 21 [27] Lin T Y, Maire M, Belongie S, et al. Microsoft COCO: common objects in context // Computer Vision – ECCV 2014. Zurich, 2014: 740 [28] Zhang Y, Mu Z C, Yuan L, et al. USTB-helloear: A large database of ear images photographed under uncontrolled conditions // International Conference on Image and Graphics. Shanghai, 2017: 405 [29] Yuan L, Zhao H N, Zhang Y, et al. Ear alignment based on convolutional neural network // Chinese Conference on Biometric Recognition. Urumqi, 2018: 562 -

下載:

下載: